Alright folks, buckle up, because I've just stumbled across something that genuinely has me buzzing – Google's new Supervised Reinforcement Learning (SRL) framework. And no, I'm not talking about your average incremental update; this feels like a fundamental shift in how we train AI, one that could unlock a new era of collaboration between humans and machines. Forget the doomsaying about AI taking over; SRL is about empowering smaller models to tackle problems previously reserved for the behemoths, paving the way for more accessible, specialized, and frankly, human-relatable AI.

The problem with current AI training, as Google's researchers point out, is that it's often an all-or-nothing game. Reinforcement Learning with Verifiable Rewards (RLVR), the current gold standard, rewards the model only when it gets the final answer right. Think of it like trying to teach a kid to ride a bike by only giving them a cookie if they complete a 10-mile ride on their first try—they might get discouraged and give up! Supervised Fine-Tuning (SFT), where you feed the model a bunch of perfect examples, isn't much better. It's like teaching by rote memorization; the model learns to mimic, not to reason.

The SRL Revolution: Teaching AI to Think, Step-by-Step

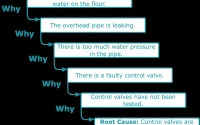

SRL, on the other hand, is like having a patient, insightful tutor who guides the model through each step of the problem-solving process. Instead of just rewarding the final answer, SRL rewards the model for taking the right actions along the way. For a math problem, that might be correctly applying an algebraic manipulation. For a software engineering task, it could be executing the right command in a code repository. It's all about breaking down complex problems into a sequence of logical "actions," giving the model rich learning signals throughout the training process.
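To make the contrast concrete, here's a tiny sketch of the two reward styles. Fair warning: this is my own illustration, not Google's implementation. The function names are hypothetical, and I'm assuming a plain text-similarity score (Python's `difflib`) as a stand-in for "did the model take the right action at this step." The article doesn't spell out how each step is actually scored.

```python
from difflib import SequenceMatcher


def outcome_reward(final_answer: str, reference_answer: str) -> float:
    """Outcome-only signal (RLVR-style): 1.0 if the final answer matches, else 0.0."""
    return 1.0 if final_answer.strip() == reference_answer.strip() else 0.0


def step_reward(model_action: str, expert_action: str) -> float:
    """Hypothetical per-step signal: text similarity in [0, 1] between
    the model's action and the expert's action for the same step."""
    return SequenceMatcher(None, model_action, expert_action).ratio()


def stepwise_rewards(model_steps: list[str], expert_steps: list[str]) -> list[float]:
    """Score each model step against the expert step at the same position."""
    return [step_reward(m, e) for m, e in zip(model_steps, expert_steps)]


# Toy algebra problem: solve 2x + 6 = 10.
expert_steps = ["2x + 6 = 10", "2x = 4", "x = 2"]
model_steps = ["2x + 6 = 10", "2x = 4", "x = 3"]  # slips on the last step

sparse = outcome_reward(model_steps[-1], expert_steps[-1])
dense = stepwise_rewards(model_steps, expert_steps)

print("outcome-only reward:", sparse)
print("step-wise rewards:  ", dense)
```

With the outcome-only signal, the model hears nothing about the two steps it got right. With the step-wise signal, it gets credit for those and a lower score only where it slipped, which is the "rich learning signal" idea in miniature.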

This is where it gets really exciting. SRL allows smaller, less resource-intensive models to learn complex reasoning tasks that were previously out of reach. It’s like teaching a small team of specialists to outperform a massive, unwieldy bureaucracy. Think about the implications: instead of relying on massive, centralized AI models, we can create smaller, more specialized AIs tailored to specific tasks, from medical diagnosis to personalized education.

I-Hung Hsu, a research scientist at Google and co-author of the paper, puts it perfectly: "SRL sits in the middle: It captures the structured flexibility of real-world problem solving, where there are multiple valid strategies but also clear notions of what ‘good reasoning’ looks like at each step." This makes SRL ideal for tasks that reward sound intermediate reasoning, not just correct final answers. It's about the journey, not just the destination.

When I read about the results of their experiments, I honestly had to take a moment. In math reasoning benchmarks, SRL-trained models outperformed those trained with SFT and RLVR by a significant margin. But the real breakthrough came in agentic software engineering, where an SRL-trained model achieved a 74% relative improvement over an SFT-based model. That's not just incremental progress; that's a paradigm shift!
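A quick note on what "relative improvement" means, since it's easy to misread as percentage points. The sketch below uses made-up success rates purely to show the arithmetic; they are not the figures from Google's paper, and the function name is my own.

```python
def relative_improvement(baseline: float, new: float) -> float:
    """Fractional gain of `new` over `baseline`: (new - baseline) / baseline."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (new - baseline) / baseline


# Hypothetical task success rates, chosen only for illustration.
baseline_rate = 0.10   # e.g. a baseline model solves 10% of tasks
improved_rate = 0.174  # e.g. the new model solves 17.4% of tasks

rel = relative_improvement(baseline_rate, improved_rate)
abs_gain = improved_rate - baseline_rate

print(f"relative improvement: {rel:.0%}")
print(f"absolute gain: {abs_gain * 100:.1f} percentage points")
```

In other words, a 74% relative improvement says the new score is 1.74 times the old one. How impressive that is in absolute terms depends on where the baseline started.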

Now, I know what some of you might be thinking: "Okay, Aris, this all sounds great, but what about the ethical implications?" And that's a fair question. As we empower AI with more sophisticated reasoning abilities, we need to be mindful of the potential for misuse. We need to ensure that these AI systems are aligned with human values and that they are used to augment, not replace, human intelligence. The power to teach AI comes with the responsibility to teach it well.

But here's where I see the real potential: SRL could usher in a new era of human-machine collaboration. Imagine AI assistants that don't just follow instructions but actually understand the reasoning behind them. Imagine AI tutors that can adapt to your individual learning style and provide personalized feedback at every step. Imagine AI collaborators that can help us solve some of the world's most pressing challenges, from climate change to disease eradication.

And it's not just me who's excited about this. I've been following the online discussions, and the sentiment is overwhelmingly positive. One commenter on Reddit put it this way: "This is the kind of breakthrough that could finally bridge the gap between AI hype and AI reality." Another wrote: "SRL could be the key to unlocking the true potential of AI for good." These are just snippets, but they show a real sense of collective anticipation.

AI: Not a Threat, But a Partner

The beauty of SRL is that it's not about creating super-intelligent machines that surpass human capabilities. It's about creating AI systems that can work alongside us, augmenting our intelligence and amplifying our problem-solving abilities. It's about building a future where humans and machines collaborate to create a better world for all. The potential here feels as revolutionary as the printing press—a new way to disseminate knowledge and amplify human potential.